CAMPUSLIFE

Health and Technology

Together with Dartmouth College, Carnegie Mellon University and Cornell University, we at Georgia Tech are interested in extending the seminal work of StudentLife, started by Dartmouth’s Andrew Campbell a few years ago. Campbell sought to determine whether mental health and academic performance could be correlated with, or even predicted from, a student’s digital footprint. We are proposing the CampusLife project as a logical extension. It aims to collect data from relevant subsets of a campus community through their interactions with mobile and wearable technology, social media, and the environment itself using Internet of Things instrumentation. Two goals drive this project: the human goal of understanding wellness for young adults, and the methodological goal of learning how to perform such experimentation while addressing its significant security and privacy challenges. We invite everyone from the Georgia Tech community to join a conversation with the lead researchers from the four universities to help influence the directions of this nascent effort.

For more information, contact Vedant Das Swain.

COSMOS

Human-Environment Interactions, Computational Materials

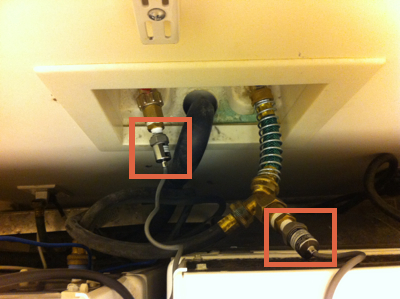

COSMOS is an interdisciplinary collaboration to design, manufacture, fabricate, and apply COmputational Skins for Multi-functional Objects and Systems (COSMOS). COSMOS consists of dense, high-performance, seamlessly networked, ambiently powered computational nodes that can process, store, and communicate sensor data. Achieving this vision will redefine the basis of human-environment interactions by creating a world in which everyday objects and information technology become inextricably entangled. COSMOS seeks to rethink the embodiment of computing through the integration of materials science and computation, literally realizing Weiser’s figurative “weaving” of technology into the fabric of everyday life.

For more information, please visit https://cosmos.gatech.edu or contact Dingtian Zhang.

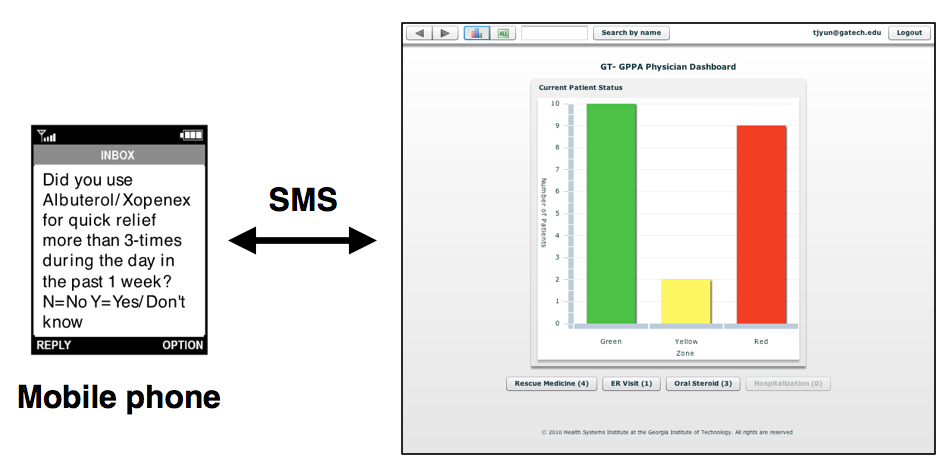

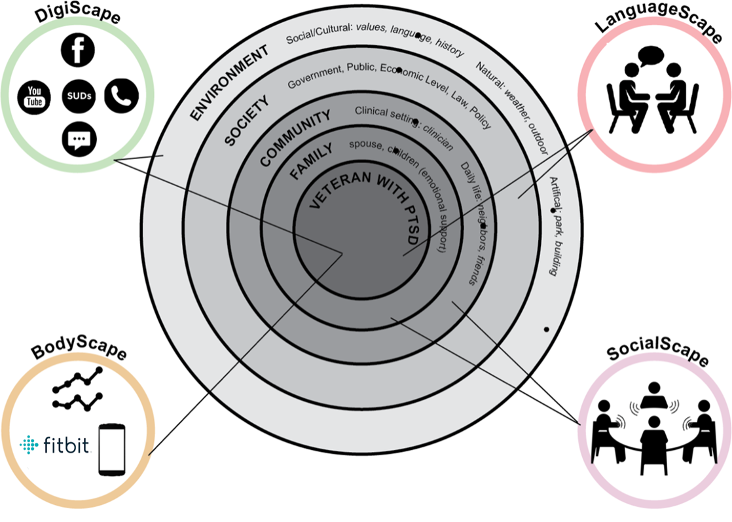

Prolonged Exposure Collective Sensing System (PECSS)

Mental Health, Post-Traumatic Stress Disorder (PTSD)

We are conducting research at the intersection of mental health management and computer science. Our project, the Prolonged Exposure Collective Sensing System (PECSS), seeks to address some of the pressing clinical challenges inherent in PTSD therapy. It leverages ubiquitous computing, human-computer interaction and machine learning. PECSS is a novel, user-tailored sensing system that allows patient data transfer and information extraction during therapeutic exercises. It also provides interfaces (a mobile application and dashboards) for monitoring this information. In the next two years we will develop, validate, and deploy computational models of heterogeneous, PE-related sensor data that will support and facilitate the improvement of PTSD treatment delivery and effectiveness for clinicians and patients. This work is funded by the National Science Foundation, in collaboration with Emory University and the University of Rochester.

For more information, please contact Rosa Arriaga.

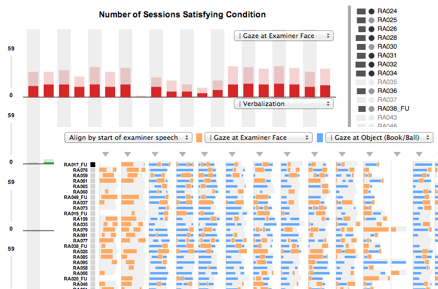

Computational Behavioral Science and Analysis

Autism, Chronic Diseases

A collaboration between developmental psychologists and computer scientists seeks to develop novel computational tools and methods to objectively measure behaviors in natural settings. The goal is to develop tools that can help us better detect, understand, and ultimately treat autism or other chronic diseases. Current standard practices for extracting useful behavioral information are typically difficult to replicate and require a lot of human time. For example, a human coder typically needs extensive training to reliably code a particular behavior or interaction. Manual coding also typically takes far longer than the running length of the video being coded. The time-intensive nature of this process strongly limits the scalability of studies.

For more information, contact Agata Rozga, Rosa Arriaga, and Gregory Abowd.

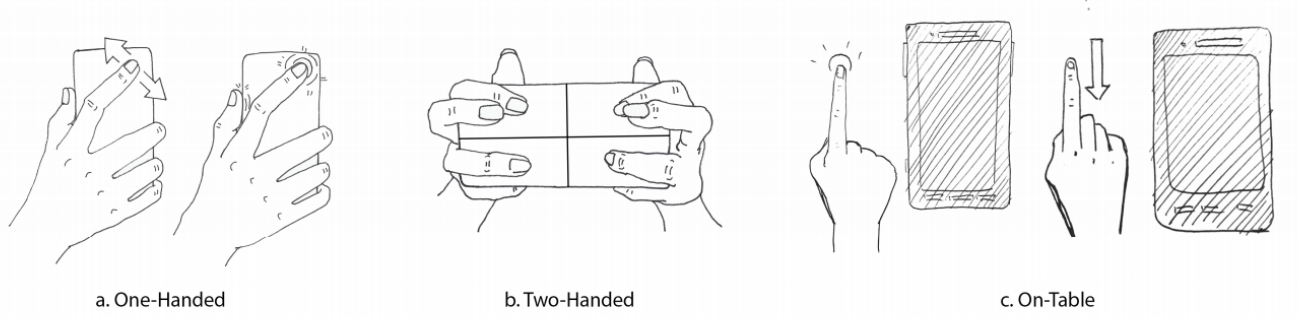

Activity and Gesture Recognition for Mobile and Wearable Computing

Activity Recognition, Gesture Recognition

Over the past few years, we have seen a number of wearable devices emerge that did a small number of tasks well (e.g., step counting). As these wrist-worn health trackers gained in popularity, commercial devices sought to do even more things around the wrist, to the point where a smartwatch is trying to become an all-purpose interaction device. Our group is working on a variety of explorations of mobile and on-body activity and gesture recognition systems, using both commodity sensing in existing devices and new form factors with novel sensing. Our goal is to expand the richness of existing interactions and activity recognition capabilities for everyday mobile and wearable computing users.

For activity recognition and passive sensing systems, reach out to Hyeokhyen Kwon.

Eating Detection

Health and Technology

Chronic and widespread diseases such as obesity, diabetes, and hypercholesterolemia require patients to monitor their food intake, and food journaling is currently the most common method for doing so. However, food journaling is subject to self-bias and recall errors, and is poorly adhered to by patients. This project explores the different ways eating episodes can be recognized as well as the potential applications of being able to recognize these episodes.

For more information, please refer to this page or contact Mehrab Bin Morshed.

Security and Privacy

Security, Privacy

Security and privacy help realize the full potential of computing in society. Without authentication and encryption, for example, few would use digital wallets, social media or even e-mail. The struggle of security and privacy is to realize this potential without imposing too steep a cost. Yet, for the average non-expert, security and privacy are just that: costly, in terms of things like time, attention and social capital. More specifically, security and privacy tools are misaligned with core human drives: a pursuit of pleasure, social acceptance and hope, and a repudiation of pain, social rejection and fear. It is unsurprising, therefore, that for many people, security and privacy tools are begrudgingly tolerated if not altogether subverted. This cannot continue. As computing encompasses more of our lives, we are tasked with making increasingly more security and privacy decisions. Simultaneously, the cost of every breach is swelling. Today, a security breach might compromise sensitive data about our finances and schedules as well as deeply personal data about our health, communications, and interests. Tomorrow, as we enter the era of pervasive smart things, that breach might compromise access to our homes, vehicles and bodies.

We aim to empower end-users with novel security and privacy systems that connect core human drives with desired security outcomes. We do so by creating systems that mitigate pain, social rejection and fear, and that enhance feelings of hope, social acceptance and pleasure. Ultimately, the goal of the Ubicomp Group/SPUD Lab is to design new, more user-friendly systems that encourage better end-user security and privacy behaviors.

For more information, contact Youngwook Do.